Mastering AI Governance and Security

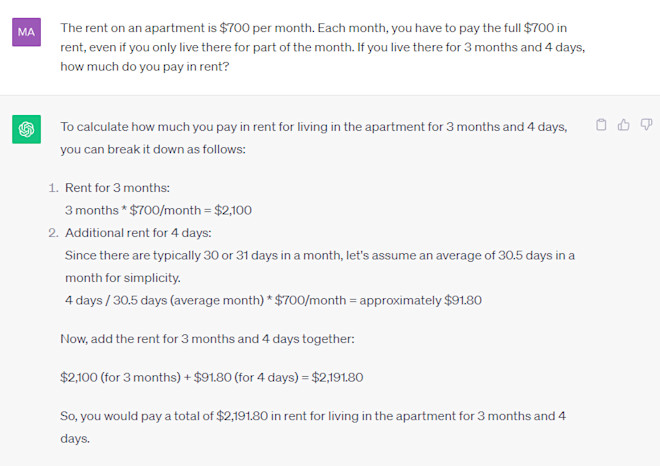

Ever played around with ChatGPT and felt something wasn’t right? Maybe it couldn’t do basic maths, or it answered a question blatantly wrong? Well, you are not alone.

ChatGPT tries maths

Amid all the excitement, GenAI comes with many challenges: an increase in cyberattacks, data privacy issues, employees making decisions based on inaccurate data, ethical risks, and more. We all remember the lawyers who used ChatGPT and cited fake cases in court, or the time the Air Canada chatbot gave a non-existent discount to a client buying a last-minute ticket for his grandmother’s funeral. Even the best intentions can go wrong: for example, Snapchat tried to launch a chatbot “friend”, which ended up advising 15-year-olds on how to hide alcohol for a party.

Despite these considerable risks, only 9% of companies are equipped to deal with these threats according to the Riskonnect AI report. As enterprise adoption accelerates, leaders need to seriously consider these risks.

From MLs to LLMs

Until now, businesses utilised narrow AI or Machine Learning (ML) algorithms designed to perform specific tasks, such as voice assistants like Siri or recommendation systems like Netflix. ML paved the way for the creation of huge companies such as Weights & Biases that specialise in enhancing ML performance through experimenting, monitoring, and security.

Now, the accelerated adoption of LLMs confronts enterprises with a new set of risks, policies, and governance frameworks. These include:

LLMs are black boxes with responses based on probabilistic predictions about word sequences. This can lead to unpredictable results.

LLMs are only as good as the data they are trained on. Therefore, they can perpetuate biases present in their training data.

LLMs introduce new security risks because their extensive training requirements consume large amounts of information, including sensitive data. This raises the potential for unintended data leaks and also exposes systems to vulnerabilities such as prompt injection attacks.

These challenges are a major blocker for businesses that are looking to deploy GenAI tools into their business operations and a significant opportunity for new entrants to develop robust infrastructures, advanced tools, and governance practices.
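To make the "probabilistic predictions" point concrete, here is a minimal sketch of how sampling from a next-token distribution can occasionally produce a wrong answer. The candidate tokens and their scores are invented for illustration; real models work over vocabularies of many thousands of tokens and their actual scores are not known to us.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(candidates, logits, temperature=1.0, rng=random):
    """Draw one token at random, weighted by its probability."""
    probs = softmax(logits, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]


# Hypothetical scores for the token that follows "2 + 2 =".
candidates = ["4", "5", "22"]
logits = [3.0, 1.0, 0.5]

probs = softmax(logits)
rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [sample_next_token(candidates, logits, rng=rng) for _ in range(1000)]
wrong = sum(1 for s in samples if s != "4")

print("probabilities:", [round(p, 3) for p in probs])
print("wrong answers in 1000 samples:", wrong)
```

Even though "4" is by far the most likely token in this toy setup, a share of the samples still lands on a wrong answer, which is the kind of unpredictability described above.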

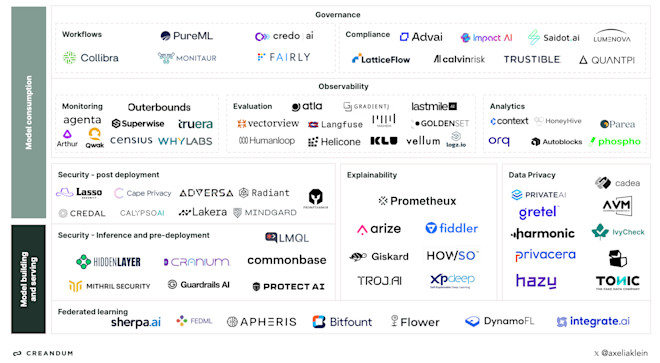

The disruptors

The AI landscape is full of companies eager to tackle these challenges covering the entire model lifecycle. We have identified some of those we are excited about within six critical areas:

Governance

Workflows: Helping companies create AI inventories to document their LLMs, and manage stakeholders to understand who can access the models, make changes, etc. Fairly provides a control centre where companies can document, maintain and track all AI projects for continuous visibility.

Compliance: Ensuring that LLM applications adhere to ethical, legal and regulatory standards. Saidot AI assesses and mitigates potential risks, provides AI policy best practices and ensures the model is compliant with regulation.

Observability

Monitoring: Understanding the behaviour of LLMs to ensure that generated content aligns with desired outcomes. Arthur helps teams monitor and measure data drifts to improve accuracy.

Evaluation: Testing and comparing models to improve accuracy, reliability and performance. Atla AI helps developers assess the risks and capabilities of their models by understanding vulnerabilities, building safety guardrails and creating reliable AI applications.

Analytics: Understanding how users utilise LLMs to optimise the model. Orq AI collects quantitative and qualitative end-user feedback to gain insights on how the model is performing.

Security

Inference and pre-deployment: Implementing security measures such as vulnerability assessments and data sanitisation before the model goes live. Protect AI secures LLMs with built-in sanitisation to prevent data leakage and prompt injection attacks.

Post-deployment: Protecting the model against new vulnerabilities and responding effectively to any security incidents. Mindgard AI safeguards AI models by detecting and responding to threats.

Explainability

Ensuring the decision-making process of LLMs is transparent and understandable. Arize provides insights into how LLMs arrive at their outcomes to mitigate the risks of potential model bias.

Data privacy

Safeguarding user data generated or processed by LLMs. Private AI detects and anonymises personal information on models.
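As a rough illustration of what "detecting and anonymising personal information" can look like, the sketch below masks email addresses and phone numbers in a prompt before it is sent to a model. This is not how Private AI or any other vendor works; the two regular expressions and the `redact` helper are simplified assumptions, and real tools typically rely on trained entity recognisers that also catch names, addresses and IDs.

```python
import re

# Simplified, hypothetical patterns; they will miss many real-world formats.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text):
    """Replace each detected span with a placeholder such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


prompt = "Contact Jane at jane.doe@example.com or +44 20 7946 0958."
print(redact(prompt))
# -> Contact Jane at [EMAIL] or [PHONE].
```

Note that the name "Jane" survives, which shows why pattern matching alone is not enough for serious anonymisation.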

Federated learning

Enables training models across decentralised devices while keeping data localised. Flower AI is a federated learning framework that allows multiple parties to collaborate on model training without sharing raw data.

Note: Most companies are tackling multiple concerns. We purposefully categorised the companies into a specific area based on what we believe they excel at. For example, Lakera’s main focus is to create GenAI applications securely but it also analyses for harmful content and model misalignment. Atla AI is a member of the Creandum funds' portfolio.

Our AI governance market map

The EU AI Act is fuelling adoption of governance tools

With the EU AI Act about to come into force, companies need to make sure they are compliant or risk a fine of €20m or 4% of their annual revenue, whichever is higher. The increasing regulatory pressure encourages the development of new technologies that can facilitate workflow governance, compliance, and ethical AI practices.
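To show how the fixed amount and the percentage of revenue interact, here is a small worked calculation. It uses only the two figures quoted above as defaults; the function name and parameters are our own, and the tiers that apply to a given case should be checked against the regulation itself.

```python
def max_fine_eur(annual_revenue_eur, fixed_amount_eur=20_000_000, revenue_percent=4):
    """Return the larger of the fixed amount and the revenue-based amount."""
    revenue_based = annual_revenue_eur * revenue_percent / 100
    return max(fixed_amount_eur, revenue_based)


# A company with EUR 100m revenue: 4% is EUR 4m, so the fixed amount applies.
print(max_fine_eur(100_000_000))

# A company with EUR 1bn revenue: 4% is EUR 40m, so the percentage applies.
print(max_fine_eur(1_000_000_000))
```

With these figures, the percentage becomes the binding number once annual revenue exceeds €500m, since 4% of €500m is exactly €20m.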

We are particularly excited about companies that are focusing on building responsible AI and managing risks. Trustible translates high-level policy requirements into actionable controls for technical teams and ensures that AI applications align with regulations, internal policies and industry standards.

We are also seeing companies helping address governance challenges and ensuring compliance with regulations and ethical guidelines. Some are focusing on building catalogues for the models and helping teams work together. Monitaur brings visibility to a model’s lifecycle and provides tools to orchestrate across teams and stakeholders.

Empowering AI with real-world feedback

We are witnessing lots of innovation in the observability space. The market map highlights three key areas: evaluating model performance, monitoring accuracy and understanding how end-users interact with the model.

There are specialised solutions such as Censius, which monitors for data drifts and sends real-time alerts so engineers can quickly improve the model. There are also companies aiming to offer the complete suite, tackling all three use cases. Take Klu, which evaluates which model works best, monitors models for accuracy and captures user preferences, allowing companies to fine-tune custom models.
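One common way to quantify data drift is the Population Stability Index (PSI), which compares how a feature is distributed in a baseline sample versus live traffic. The sketch below is a minimal, dependency-free version and is not taken from any of the products mentioned; the bin count, the `eps` floor and the 0.2 alert threshold are assumptions that teams usually tune for their own data.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def histogram(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = histogram(expected), histogram(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]  # values seen at training time
shifted = [v + 0.5 for v in baseline]     # live values that have drifted

ALERT_THRESHOLD = 0.2  # assumed rule-of-thumb cut-off
score = psi(baseline, shifted)
if score > ALERT_THRESHOLD:
    print(f"drift alert: PSI={score:.2f}")
```

A score of zero means the two samples fall into the bins identically; the larger the score, the further live data has moved from what the model was trained on.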

We know GenAI can do incredible things, but it can also be frustrating for people, which is why we are interested in companies focusing on user behaviour and looking to improve models based on real interactions and human feedback. Context captures user feedback to get details on application performance and points out how to enhance the model. For more sensitive industries that cannot wait until a user complains, there are companies such as Maihem, which creates realistic personas to interact with conversational AI, offering a proactive approach for businesses eager to improve with “user” feedback.

Securing the future of AI (and your data)

The biggest concerns when implementing GenAI are related to security and data breaches. Some companies, such as Samsung, have even banned their employees from using ChatGPT after an engineer uploaded sensitive internal code.

Despite these concerns, we are believers in choosing innovation over restriction. It is encouraging to see how many others agree and are stepping up to tackle these challenges. Numerous companies are emerging to safeguard LLM assets from unauthorised access, data breaches, and other security threats. There are different security measures to consider when building the model and when consuming it. Cranium connects to the models and secures LLMs against threats without interrupting workflow. Lasso Security analyses which models are being used in the organisation, detects if employees are sending or receiving risky data and blocks malicious attempts.

What is next?

Open source (or close your business)

Closed-source models, dominated by a few big players, have raised concerns about lack of transparency, biases, and platform risks. In recent months, we have witnessed the growth of open-source models, led by Mistral AI. This growth is reshaping software development and deployment, marking a shift towards more open, collaborative technological landscapes.

The distribution of open-source models will only accelerate going forward, driven by companies’ desire to “own” their models and take advantage of the flexibility and the potential gains in cost, latency and accuracy from running smaller, more specialised instances. However, the flexibility that makes open source attractive also means a stronger need for a robust tooling ecosystem. Because open-source projects are developed and maintained by communities rather than a single entity, coordination and quality control become more challenging. Stronger tools are needed for governance: version control, code review, continuous integration, etc. Additionally, the openness of these models makes it simpler for malicious actors to identify and exploit vulnerabilities, so strong data privacy and security tools are needed for open-source models.

AI meets privacy

As concerns around data privacy and security grow, federated learning emerges as a powerful solution. Federated learning enables models to be trained directly on devices or local servers, without the need to centralise the data. This approach safeguards personal and sensitive information.
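The idea can be sketched with federated averaging, one widely used aggregation scheme, on a toy linear model. Each simulated client trains on its own data and shares only model weights, which the server averages by sample count. The client data, learning rate and round counts below are invented for illustration, and frameworks such as Flower handle the networking, scheduling and security that this sketch leaves out.

```python
def local_update(weights, data, lr=0.1, epochs=5):
    """Run a few gradient-descent steps on one client's private data."""
    w, b = weights
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = (w * x + b) - y
            grad_w += 2 * err * x
            grad_b += 2 * err
        w -= lr * grad_w / len(data)
        b -= lr * grad_b / len(data)
    return (w, b)


def federated_average(updates, sizes):
    """Average client weights, weighted by each client's sample count."""
    total = sum(sizes)
    w = sum(u[0] * n for u, n in zip(updates, sizes)) / total
    b = sum(u[1] * n for u, n in zip(updates, sizes)) / total
    return (w, b)


# Two clients hold different slices of data generated by y = 2x + 1.
# The raw (x, y) pairs never leave the client; only weights are shared.
clients = [
    [(-1.0, -1.0), (0.0, 1.0), (1.0, 3.0)],
    [(-2.0, -3.0), (2.0, 5.0)],
]

global_weights = (0.0, 0.0)
for _ in range(20):  # communication rounds
    updates = [local_update(global_weights, data) for data in clients]
    global_weights = federated_average(updates, [len(d) for d in clients])

print(global_weights)  # approaches (2.0, 1.0)
```

Sharing weights instead of records reduces exposure, but weights can still leak information, which is why production systems often add techniques such as secure aggregation or differential privacy on top.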

The adoption of federated learning has the potential to revolutionise sectors like healthcare, finance and government, where data privacy regulations such as Europe's GDPR pose significant barriers to traditional AI implementations. Companies like Bitfount bring AI directly to your data, which remains behind a firewall at all times. Use cases include accelerating clinical trials and fighting financial crime while ensuring compliance and control.

As we navigate the complexities of modern data usage, federated learning stands out as a key solution for privacy-sensitive industries. It represents a crucial step towards creating a future where the benefits of AI can be realised without compromising individual privacy or security.

AI agents running wild

As more companies focus on building autonomous AI agents rather than simple input/output systems, a new set of challenges arises. While LLMs primarily process and generate text, AI agents built on them are able to take various inputs, make decisions and produce actions in dynamic environments. LLMs rely on unsupervised learning techniques and operate within predefined tasks, while AI agents utilise a combination of learning paradigms, including reinforcement learning, and exhibit autonomy by adapting to changing conditions.

Current approaches emerging in the space employ access controls and encryption techniques that can safeguard sensitive data and prevent unauthorised access to the agent's systems and communication channels. We expect these to become increasingly relevant, both in more verticalised enterprise use cases and for the multiple players aiming to build AGIs via autonomous agents.
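As one hedged illustration of access control for agents, the sketch below routes every tool call through a gateway that checks a per-agent allowlist and records the attempt. The `ToolGateway` class, the agent ID and the tool names are all hypothetical and do not reflect any specific product; encryption of the communication channel is out of scope here.

```python
class ToolGateway:
    """Checks a per-agent allowlist before any tool is executed."""

    def __init__(self, tools, permissions):
        self._tools = tools              # tool name -> callable
        self._permissions = permissions  # agent id -> set of allowed tool names
        self.audit_log = []              # (agent_id, tool_name, allowed)

    def call(self, agent_id, tool_name, **kwargs):
        allowed = tool_name in self._permissions.get(agent_id, set())
        self.audit_log.append((agent_id, tool_name, allowed))
        if not allowed:
            raise PermissionError(f"{agent_id} may not call {tool_name}")
        return self._tools[tool_name](**kwargs)


# Hypothetical tools an agent might request.
tools = {
    "search_docs": lambda query: f"results for {query!r}",
    "send_payment": lambda amount: f"paid {amount}",
}
permissions = {"support-agent": {"search_docs"}}

gateway = ToolGateway(tools, permissions)
print(gateway.call("support-agent", "search_docs", query="refund policy"))

try:
    gateway.call("support-agent", "send_payment", amount=100)
except PermissionError as exc:
    print("blocked:", exc)
```

Keeping the check outside the agent itself means a manipulated prompt cannot widen the agent's permissions, and the audit log gives governance teams a record of what was attempted.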

Evolution or extinction

The wide adoption of GenAI by enterprises is a reality. Beyond mere automation, AI is becoming a core component of digital transformation strategies, driving efficiencies, enabling data-driven decision-making, and unlocking new opportunities for growth.

However, simply plugging in GenAI is not enough. Without a comprehensive strategy that includes robust GenAI tooling, all efforts may result in only short-lived success. Companies that overlook this aspect of GenAI integration end up with ineffective applications, which exposes them to risks and could make them obsolete.

Fortunately, there are numerous companies stepping up to ensure businesses can harness GenAI’s full potential. These companies are assisting with data training, data privacy, security, observability and governance and are key partners for business growth.

At Creandum, we look for products and solutions capable of delivering a 10x better experience. GenAI tooling will change the way businesses operate and grow, so if you are a company building in this space and want to chat, let us know!
